(Last updated: 10/17/2021 | PERSONAL OPINION)

Since I left SAS Institute, an analytical software vendor, I have worked in two analytics heavy shops, both in process of digitizing their services and viewing data, analytics and AI/ML (DnA) as the core enabler. The size, industry, culture and approach of the two could not be more different, yet their wants and needs for analytics couldn’t be more similar. Hence, I’m tempted to generalize from my sample of two here.

Experience and Operation

For any enterprise, these are the two easy pieces with opposite objective functions: For customer experiences, you want to maximize. For operations, you want to minimize (the friction and cost).

Digital

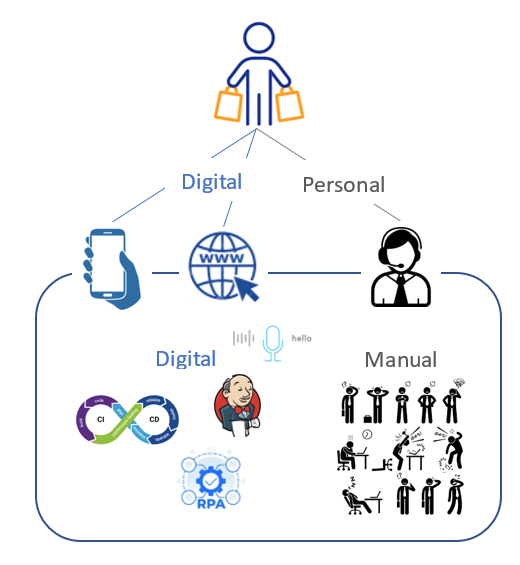

There are two aspects to digitization of an enterprise: external facing is the digital channels to serve customers’ needs and internal facing is the digital ways of working.

Analytical

With digitization comes the overabundance of data, which enables and necessitate the line of work of analytics and insights generation. When data comes in too fast and too much, human driven analytics must be aided by or cede to model driven AI/ML.

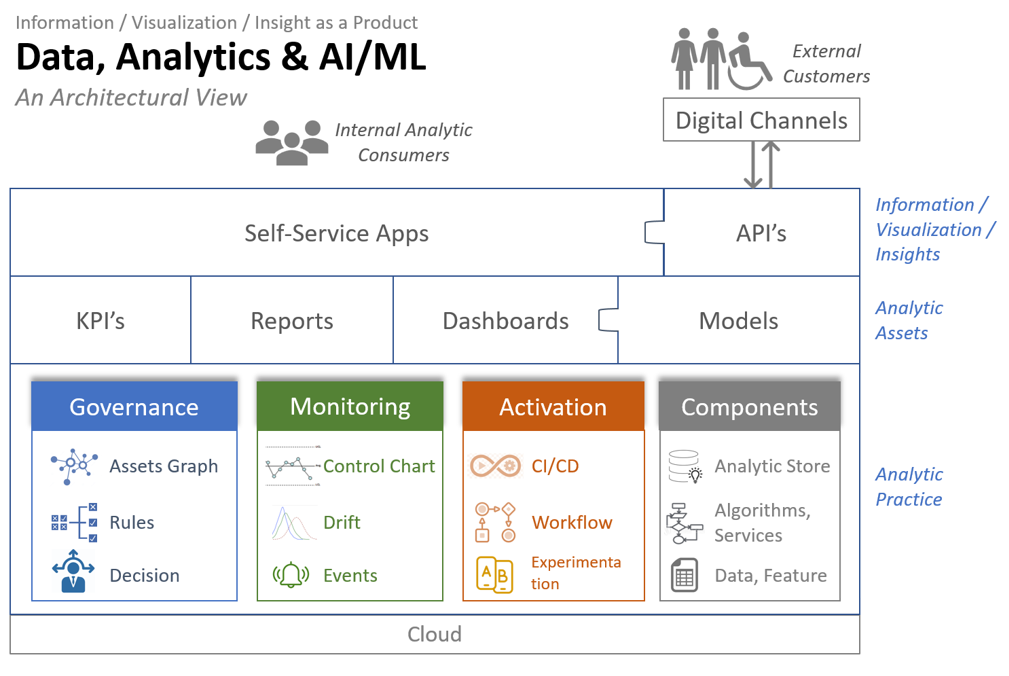

Every digitized enterprise needs data, analytics and AI/ML, is there a general architecture that applies to all?

Assets

Metrics, KPI’s, reports, dashboards and models are the typical analytic assets an enterprise use to help improve business processes. Their formats range from numbers to tables to texts to visualizations, and their nature range from descriptive to predictive to prescriptive.

Delivery

“Self-service” is the most wanted feature asked for by internal business partners. Instead of getting a static image depicting a specific configuration, business partners want interactive visualization app with which they can conduct exploratory and investigative study of their own, by tweaking the configurations and generating visualizations on the fly. Same idea applies to other type of assets as well as the underlying data. They want mechanism which enable them to obtain data or produce assets by themselves.

For non-human consumers, such as web/mobile apps, API is THE way to serve analytical insights.

Practice

What differentiate one enterprise from others is its analytic practice to produce and deliver analytic assets. Is the KPI calculated once a year in Excel by a business analyst, or is it produced automatically daily by a pipeline and feed into a control chart which monitors, alerts and triggers appropriate actions?

Without analytic practice, an enterprise can still produce various analytic assets, though it cannot scale up the pace and quality of assets produced.

Governance

Governance is not about compliance and policing, as most enterprises approach it today, rather it’s about decisioning in aim of effective management and use of assets.

As the foundation of good governance is the assets graph: taxonomy, ontology, lineage, catalogue, glossaries… Tools that capture the semantics of things as well as the relationship among things.

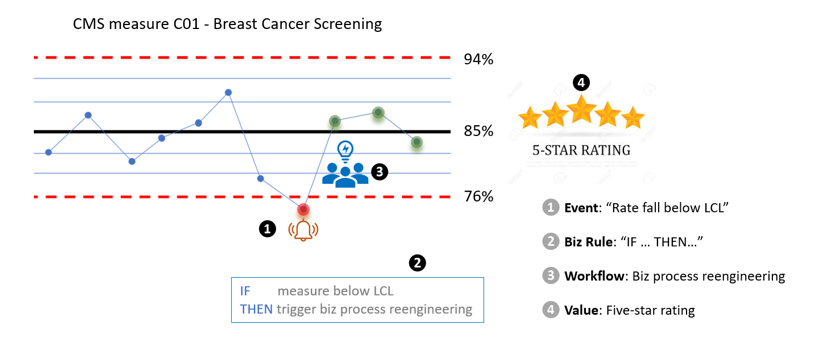

Once the assets graph is in place, a dynamic layer can be built on top: alerts that capture exceptions, rules that prescribe how to handle things and ultimately decision workflows with complex logic.

The semantic layer models the environment in which analytics operates and the dynamic layer on top ensures the alignment between business processes and objectives.

Monitoring

Monitoring is the nerve system of an enterprise’s analytic function: Events captures the raw sensory signals. Alerts/Messages triages events based on semantics and rules. Control charts exert statistical process control over KPI’s…

Activation

There are different levels of activation corresponding to different roles in the analytics ecosystem: at the lower level, CI/CD enables data engineer and data scientists commit and deploy their changes to data pipelines or models; One level up, inference workflows produce insights on schedule or triggered by events; On the top level, business partners and consumers consumes insights via self-service apps or API’s.

Whether it’s the data engineers running unit test, or the data scientist tuning model parameters or the outcome researchers doing A/B testing, experimentation is a crucial step before activation.

Components

A proven mechanism to deploy and run batch models, an efficient way of windowing on streaming data, a curated dataset that provide 360֯ view of customers… These are all examples of components of the analytic store which facilitate reuse, promote quality consistency and foster innovation.

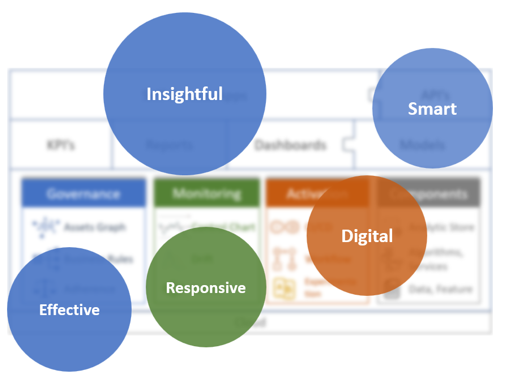

Effective, Responsive, Automatic, Smart and Insightful

To be efficient, nimble and innovative, an enterprise needs all these must-have traits, and different components of the analytic practice as well as other layers of the reference architecture contribute to different traits of a digital enterprise.

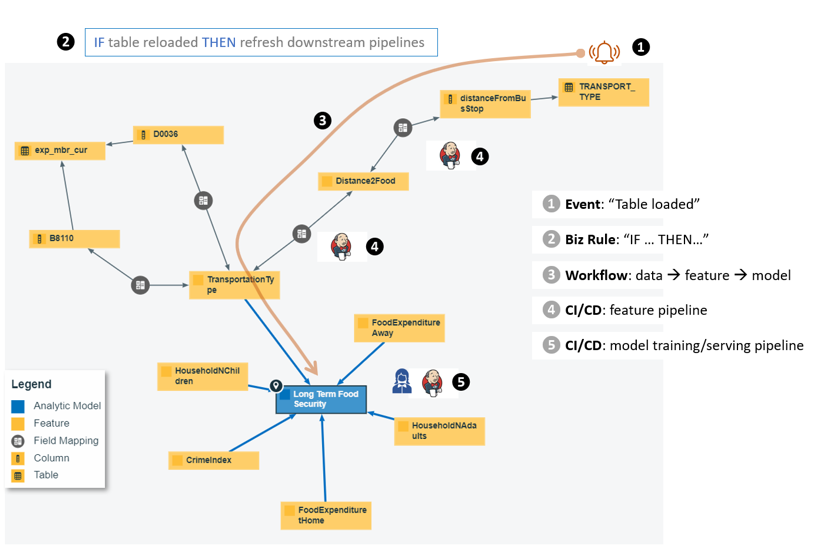

Use case #1, Dynamic behaviors on top of the static assets graph

It takes a village to build an analytic model. Multiple data sources, pipelines for feature engineering, model training and model serving, ad hoc task hyper parameter tuning… It’s already a feast to capture the assets, processes and interdependencies into a knowledge graph, but we can do better! With the help of events, business rules and decisioning as well as activating workflows, we can refresh the model as soon as it has been detected that the underlying distribution of data has drifted.

Use Case #2, KPI monitoring

Center for Medicare and Medicaid Services(CMS) is the government agency which defines, measures and reports around 80 health care quality metrics. All insurance providers are required to calculate and report on the set of metrics annually. If you have the SQL or Python code written, why not schedule it to run weekly or daily? Why not monitor the metric and intervene as soon as it dips below the lower control limit?

Cloud

The appeal of cloud to enterprises boils down to the simple fact that, as organizations grounded in specific problem domains that’s not hardware or software (analytics being a special software), they don’t want to deal with hardware or software. On public clouds, storage, compute, AI/ML, events, messages, streaming… everything is a service. With these services managed by AWS, the enterprise can focus on what really matters: data, features, knowledge graphs, models…

I saw a small team in a mid-sized enterprise trying to build cloud data science platform from scratch on AWS, ignoring managed services such as AWS/Sagemaker, which totally missed the point of moving to the cloud. Needless to say, the team didn’t succeed and I lost my patients and jumped ship.

Segmentation, commoditization, democratization, and specialization

Because of the servicefication of various components, the analytics ecosystem has been segmented into finely grained granularities. Gone are the days of gigantic appliances or mammoth vertical solutions. If I can quickly stack up a data ingestion service, a SQL query engine and a visualization layer, all being the best-of-class in their respective niche, why do I want to buy so-called BI solution such as Cognos?

Big players often give away tools/components for free to drive adoption of their managed services. In other words, these otherwise lucrative tools/components have been intentionally commoditized.

Fine-grained segmentation lowered the barriers to entry while reducing the efforts for perfection. It’s commonplace nowadays for a single-maintainer to produce a successful tool/component. Segmentation enticed innovation.

Both commoditization and open-source innovation expand accesses, the democratization, of analytics.

All above leave but one option for those who want to sell analytic components/services: specialization. Find a small area which is not yet commoditized or disrupted by open source, offer the best API and services that play well with the rest of the cloud. Conversely, for those who succeeded in specialization, do one thing and do it even better. I’m looking at you Tableau, be the visualization layer that you’re good at, nobody will use you for AI/ML.

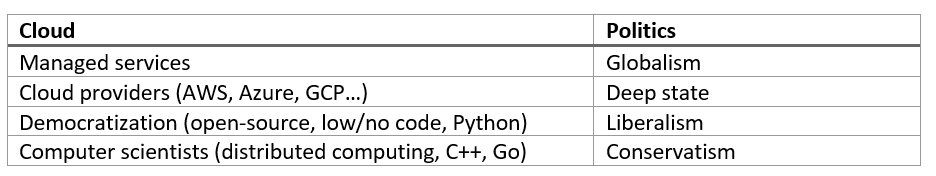

Liberalism, conservatism and deep state

I was frustrated seeing data scientists, those kind who know not enough computer science and filled with see-how-deep-is-my-learning hubris, are willing to do only one thing for their CPU and/or memory bound inferencing jobs: “liberally” throwing more and beefier compute instances at their jobs, refusing to do what an “conservative” computer scientist considers must do: profiling, optimizing the flow and reducing the memory footprint of, none other than your own model and inference code!

Since I mentioned liberal and conservative, it’s quite amusing to compare the cloud ecosystem with current day politics.

I’m struggling to find the “Trumpism” counterpart in tech: Anti-globalization (tends to do everything inside their walled garden as much as profits permits), appealing more to emotion than reason (high price for what it’s worth), x-supremacy (we’re deh best)… Apple?

Rants and jokes aside, a mature analytics organization strives to play the cloud game smart: Use managed services when it makes sense and curb associates’ tendency of reaching for more storage and compute rather than optimizing their code (welfare vs work).

In Summary

By putting forth the reference cloud native analytic architecture of the digital enterprise, I hope vendors and consumers/practitioners of analytics alike realize the new paradigm of app development that is architecting with managed cloud services, the new opportunities of revamping analytic practices which drive nimble and smart organizational behaviors and the new challenges comes with the new architecture, services and AI/ML technologies.

Reference:

- Law of the tech: commoditize your complement

- Events, Assets graph and Workflow in the enterprise analytic architecture correspond nicely with the episodic, semantic and procedural memory.